Mantis Cloud Storage Infrastructure

In a nutshell

To use Mantis, run the following commands once, on the head node of each cluster you want to use:

source /applis/site/nix.sh

/applis/site/mantis_init.sh

Then, to keep your authentication token valid for 90 days:

source /applis/site/nix.sh

iinit --ttl 2160

The maximum TTL is 8600 hours (about one year).

Command-line access from the clusters is done with the iCommands (ils, iput, iget…), which are packaged with Nix, so you have to source the Nix environment in each new session:

source /applis/site/nix.sh

Mantis

Mantis is a distributed iRODS cloud storage system, available from all compute nodes of the GRICAD HPC infrastructure. It is an efficient way to store the input data and the results of computing jobs (stage-in/stage-out). It is powerful (storage is distributed among several nodes), high-capacity and scalable (labs can easily contribute to expanding the storage capacity by adding new 250 TB nodes), and it provides a programmable rules engine. Access and data throughput are not as fast as on other infrastructures such as Bettik, but Mantis is a place to load data before running a computing job and to store the final output.

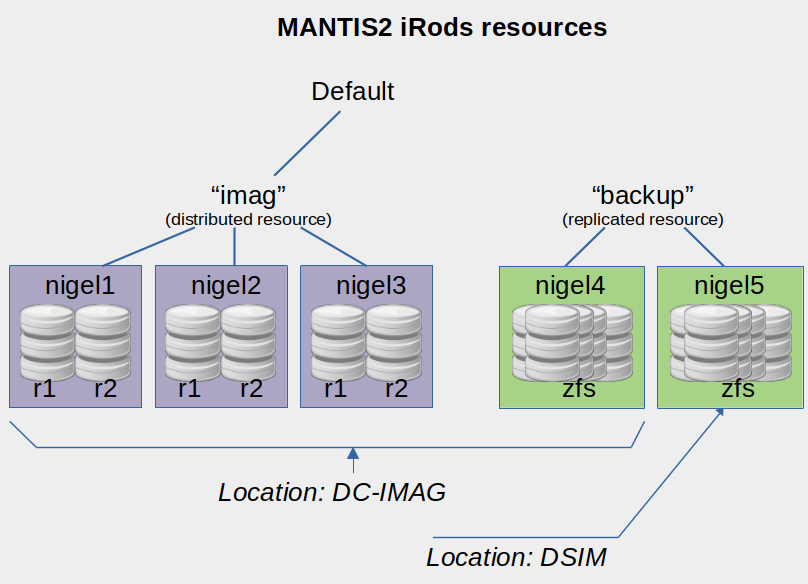

Mantis provides “standard” resources and “backup” resources. The former are grouped into the imag resource. These are the default working resources, and they distribute files efficiently across several storage nodes. The latter are grouped into the backup resource, which must be requested explicitly. This resource provides automatic replication across two very large (but slow) ZFS file systems located in two different data centers.

As of May 2022, the standard resources offer 750 TB of capacity, and the backup resources offer 2 × 1.5 PB of capacity.

Most storage infrastructures, especially cloud storage, don’t handle large collections of small files very well, and this is especially true of iRODS. It is far better to bundle the files into a single archive with a tool like tar before uploading it to Mantis, and to unpack the archive on the compute node after downloading it, than to upload a large collection of small files. Since each file transaction (read, write, copy, move) generates many associated database transactions in iRODS, the overhead on the file operation itself can quickly become very large and slow down your compute jobs.
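As an illustration, the archive-based stage-out/stage-in pattern described above could be scripted as follows. This is a minimal sketch: the function names `stage_out` and `stage_in` and the `.tar.gz` naming are hypothetical, the target collection is assumed to exist already (create it with `imkdir`), and the archive is written to the current directory, so run it from a file system with enough free space (e.g. Bettik).

```bash
#!/usr/bin/env bash
# Sketch: move one archive instead of many small files (hypothetical helpers).

# Pack a local directory into <name>.tar.gz, upload it with a verified
# checksum (-K), then remove the local archive.
stage_out() {
  local src_dir="$1" collection="$2"
  local name archive
  name=$(basename "$src_dir")
  archive="$name.tar.gz"
  tar -czf "$archive" -C "$(dirname "$src_dir")" "$name" &&
    iput -K "$archive" "$collection" &&
    rm -f "$archive"
}

# Download an archive from a collection (checksum verified with -K),
# unpack it in the current directory, then remove the archive.
stage_in() {
  local collection="$1" archive="$2"
  iget -K "$collection/$archive" . &&
    tar -xzf "$archive" &&
    rm -f "$archive"
}
```

For example, `stage_out ./results my_runs` at the end of a job, and `stage_in my_runs results.tar.gz` on the compute node of a later job.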

Access

Currently, access to Mantis storage is provided from the Dahu, Bigfoot, Luke and Ceciccluster clusters. All users who have a PERSEUS account automatically have storage space in Mantis.

Use of this infrastructure is subject to acceptance of the charter.

Initialization

Before using Mantis storage for the first time, you must initialize a configuration directory in your home directory on each compute cluster. To do this, run:

/applis/site/mantis_init.sh

This will create an .irods directory with a default configuration and ask you for your PERSEUS password to create an authentication token. This is a one-time process.

To check that everything is working properly, use the ilsresc command, which gives you the current list of resources:

```
mylogin@cluster:~$ ilsresc
backup:replication
├── nigel4-zfs:unixfilesystem
└── nigel5-zfs:unixfilesystem
imag:random
├── nigel1-r1-pt:passthru
│   └── nigel1-r1:unixfilesystem
├── nigel1-r2-pt:passthru
│   └── nigel1-r2:unixfilesystem
├── nigel2-r1-pt:passthru
│   └── nigel2-r1:unixfilesystem
├── nigel2-r2-pt:passthru
│   └── nigel2-r2:unixfilesystem
├── nigel3-r1-pt:passthru
│   └── nigel3-r1:unixfilesystem
└── nigel3-r2-pt:passthru
    └── nigel3-r2:unixfilesystem
mantis-cargoResource:unixfilesystem
```

Authentication

If your authentication token has expired, you may get the following error:

failed with error -826000 CAT_INVALID_AUTHENTICATION

The solution is to re-authenticate with the iinit command from any GRICAD computing machine, providing your GRICAD PERSEUS password:

mylogin@cluster:~$ iinit --ttl 2160

Enter your current PAM password:

mylogin@cluster:~$

The lifetime of the authentication token is 90 days (2160 hours), because the --ttl 2160 option is passed. This means you will have to run iinit again after 90 days if you still want to use the service.
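If you script your sessions, a small helper can convert a lifetime in days into the hours expected by `iinit --ttl` and re-authenticate only when needed. This is a sketch under stated assumptions: the function names are hypothetical, the 8600-hour cap is the maximum given at the top of this page, and any `ils` failure (not only an expired token) triggers `iinit`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: convert days to hours for `iinit --ttl`,
# capped at the 8600-hour maximum.
ttl_hours() {
  local hours=$(( $1 * 24 ))
  if [ "$hours" -gt 8600 ]; then
    hours=8600
  fi
  echo "$hours"
}

# Hypothetical helper: run iinit only if a simple `ils` fails
# (for example with CAT_INVALID_AUTHENTICATION). Default lifetime: 90 days.
ensure_mantis_auth() {
  if ! ils >/dev/null 2>&1; then
    iinit --ttl "$(ttl_hours "${1:-90}")"
  fi
}
```

For example, `ensure_mantis_auth` at the top of a job script prompts for your PERSEUS password only when the current token no longer works.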

Quotas

By default, a quota of 100 TB is set for each user. If you need more space, please open a ticket on the SOS-GRICAD helpdesk or write to sos-calcul-gricad.
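To get a rough idea of how much of this quota you use, the iquest query shown in the iCommands examples can be applied to your whole home collection. This is a sketch with several assumptions: the home path is /mantis/home/<login> (as in the examples on this page), 1 TB is taken as 10^12 bytes, `SUM(DATA_SIZE)` may count each replica separately, and the `LIKE` prefix match would also include sibling collections whose names start with your login. The quota accounting done by GRICAD may differ.

```bash
#!/usr/bin/env bash
# Hypothetical helper: total size in bytes under /mantis/home/<login>.
# The "%s" format makes iquest print only the value.
mantis_usage_bytes() {
  local home="/mantis/home/${1:-$USER}"
  iquest "%s" "SELECT SUM(DATA_SIZE) WHERE COLL_NAME LIKE '$home%'"
}

# Hypothetical helper: usage as a percentage of a quota given in TB
# (default 100 TB, the default Mantis quota).
mantis_usage_percent() {
  local bytes quota_tb="${2:-100}"
  bytes=$(mantis_usage_bytes "$1")
  awk -v b="$bytes" -v q="$quota_tb" 'BEGIN { printf "%.1f%%\n", 100 * b / (q * 1e12) }'
}
```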

Clients

iCommands

To access Mantis via the command line, you must use the iCommands clients. They are available as a Nix package that is installed by default when you source the Nix environment:

source /applis/site/nix.sh

The most common iCommands are ils (to list files), iput (to upload files) and iget (to download files). See the iRODS documentation for more information.

Some examples:

```
# Upload a file from a Bettik directory on Dahu:
username@f-dahu:/bettik/PROJECTS/pr-projectname/username$ iput 10GBtestfile

# List files
username@f-dahu:/bettik/PROJECTS/pr-projectname/username$ ils
/mantis/home/username:
  10GBtestfile

# List files with details
username@f-dahu:/bettik/PROJECTS/pr-projectname/username$ ils -l
/mantis/home/username:
  username 0 imag;nigel1-r2-pt;nigel1-r2 10485760000 2022-06-27.16:14 & 10GBtestfile

# Delete the local copy, then download the file from Mantis
username@f-dahu:/bettik/PROJECTS/pr-projectname/username$ rm 10GBtestfile
username@f-dahu:/bettik/PROJECTS/pr-projectname/username$ iget 10GBtestfile

# Create a collection (a directory)
username@f-dahu:/bettik/PROJECTS/pr-projectname/username$ imkdir images
username@f-dahu:/bettik/PROJECTS/pr-projectname/username$ iput stickers.jpg

# Delete a file
username@f-dahu:/bettik/PROJECTS/pr-projectname/username$ irm stickers.jpg

# Upload a file into a collection
username@f-dahu:/bettik/PROJECTS/pr-projectname/username$ iput stickers.jpg images
username@f-dahu:/bettik/PROJECTS/pr-projectname/username$ ils
/mantis/home/username:
  10GBtestfile
  C- /mantis/home/username/images
username@f-dahu:/bettik/PROJECTS/pr-projectname/username$ ils images
/mantis/home/username/images:
  stickers.jpg

# Compute the total size of a collection, recursively, in bytes
$ iquest "SELECT SUM(DATA_SIZE) WHERE COLL_NAME LIKE '/mantis/home/username/images%'"
DATA_SIZE = 1015834187376

# Compute and display the checksums of the files in a collection
$ ichksum images
C- /mantis/home/bzizou/images:
    ngc7000.jpg    sha2:2T6SMIUxiFqaJ6I+XqnyWSRDTDRzlGooc2FEEndpMfA=
    stickers.jpg    sha2:9xzvtX7XEQda4FMOUPw7JYjTUdEEaKTHnxK4YfmEp80=
```

Note: if you don’t want to load the Nix environment, be sure to add /nix/var/nix/profiles/default/bin to your PATH environment variable (export PATH=/nix/var/nix/profiles/default/bin:$PATH).
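For instance, the following lines could go in your `~/.bashrc`. This is a minimal sketch: the function name `add_nix_to_path` is hypothetical, and the check only avoids adding the directory twice when the file is sourced several times in the same session.

```bash
# Hypothetical ~/.bashrc fragment: make the iCommands available without
# sourcing /applis/site/nix.sh, adding the Nix profile directory only once.
add_nix_to_path() {
  local nix_bin=/nix/var/nix/profiles/default/bin
  case ":$PATH:" in
    *":$nix_bin:"*) ;;                       # already in PATH: nothing to do
    *) PATH="$nix_bin:$PATH"; export PATH ;; # prepend it
  esac
}
add_nix_to_path
```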

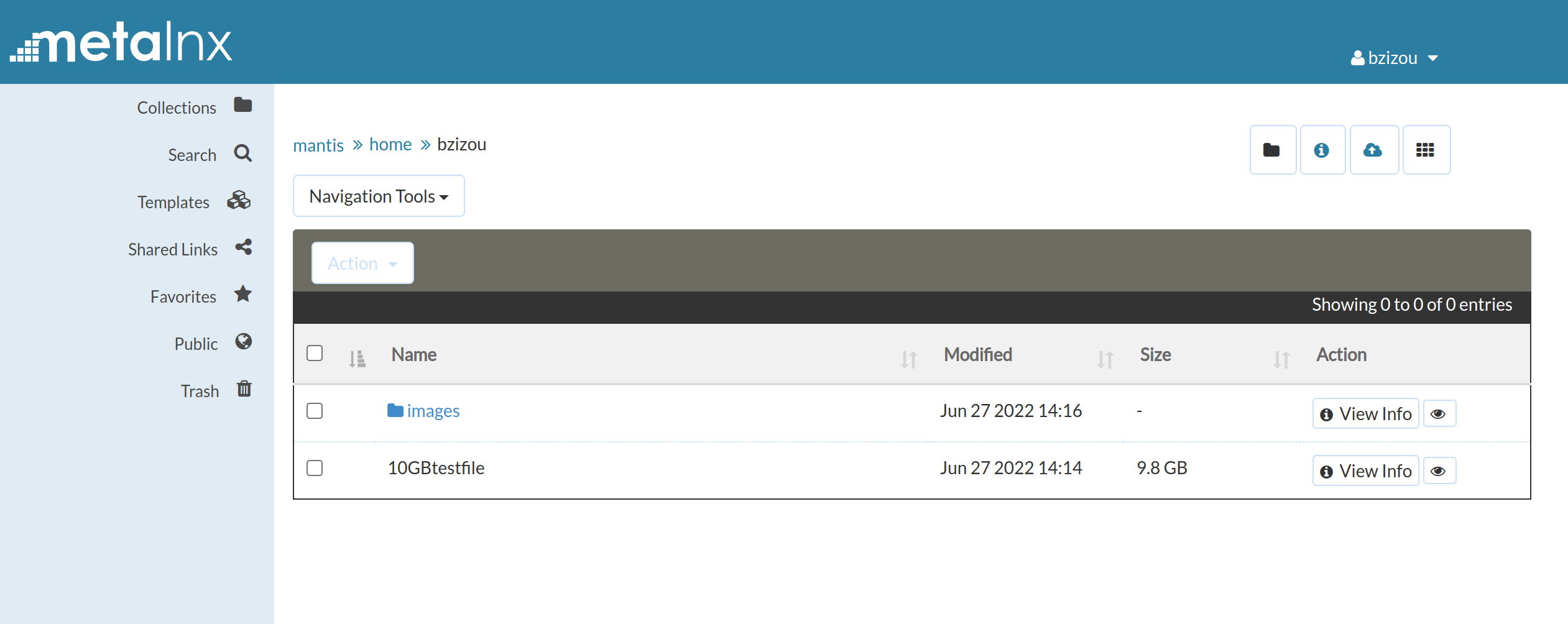

Web interface

The Metalnx web interface allows you to browse and manage your files from a web browser.

The Mantis Metalnx interface is accessible here: Metalnx

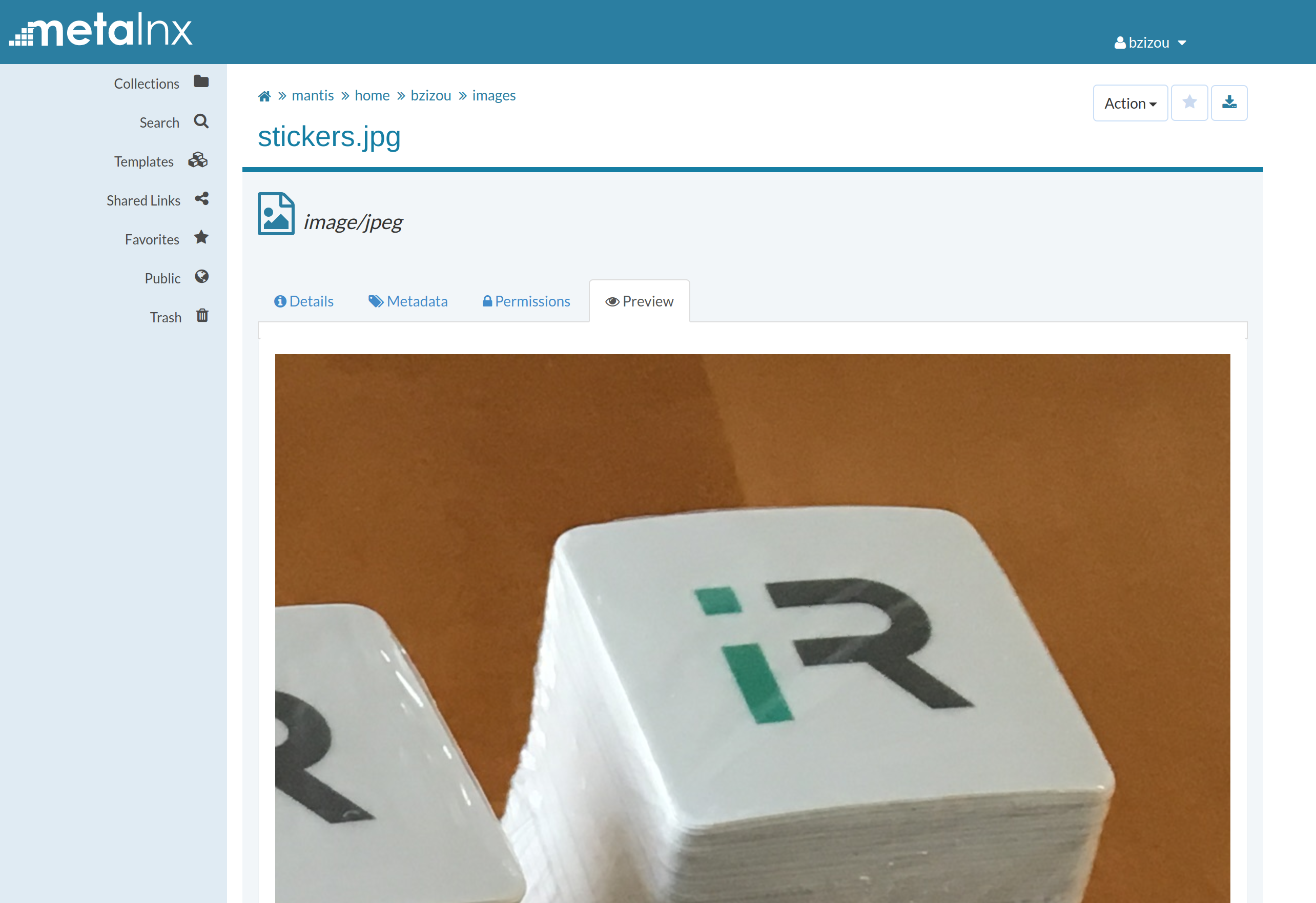

This interface also allows you to search for files by querying the metadata, and to display a preview of the images.


The Metalnx interface is useful for browsing, but its performance is poor when downloading or uploading large files. We therefore strongly recommend the WebDAV protocol, described below, if you need to transfer files from locations where the iCommands are not available.

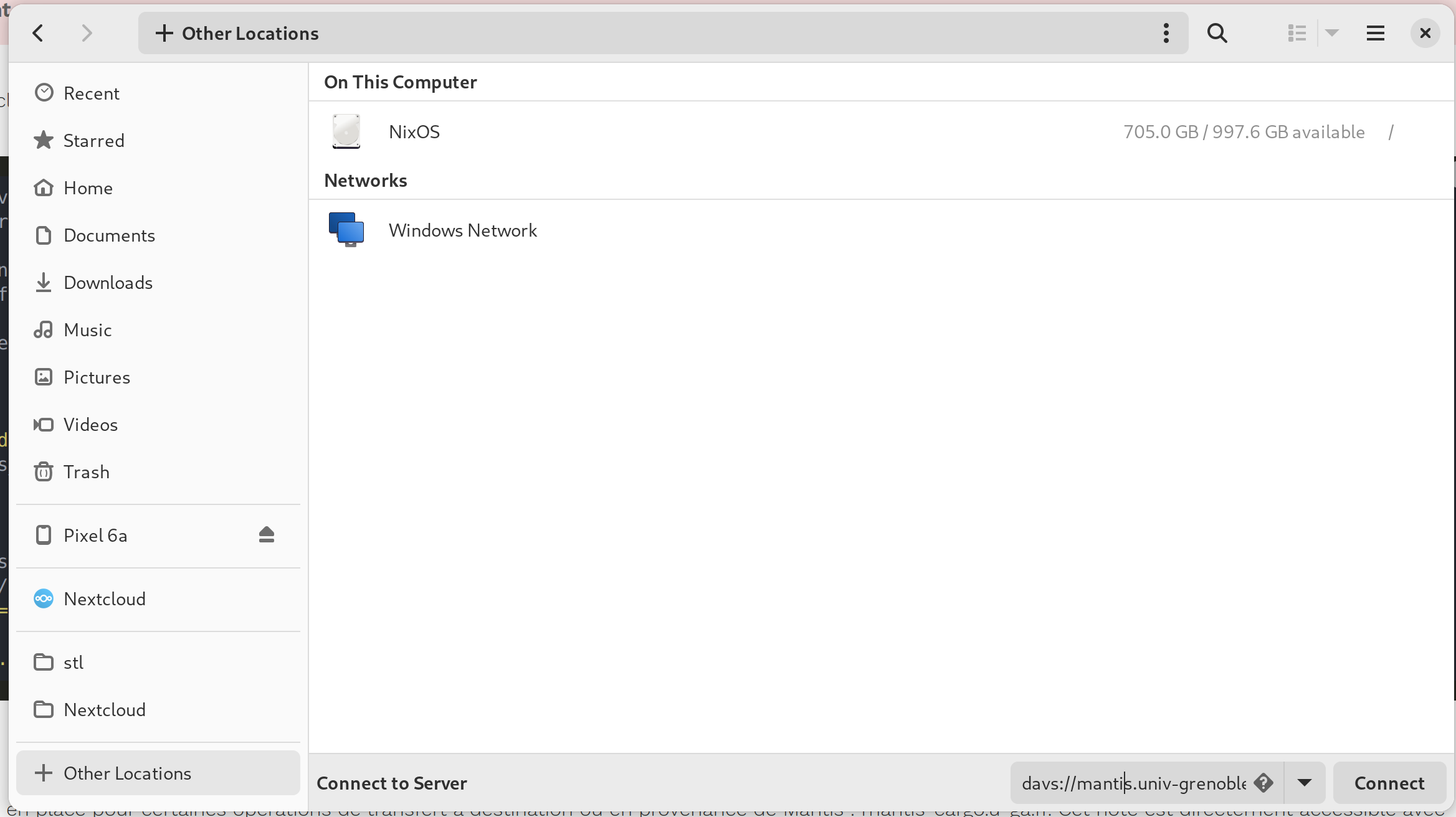

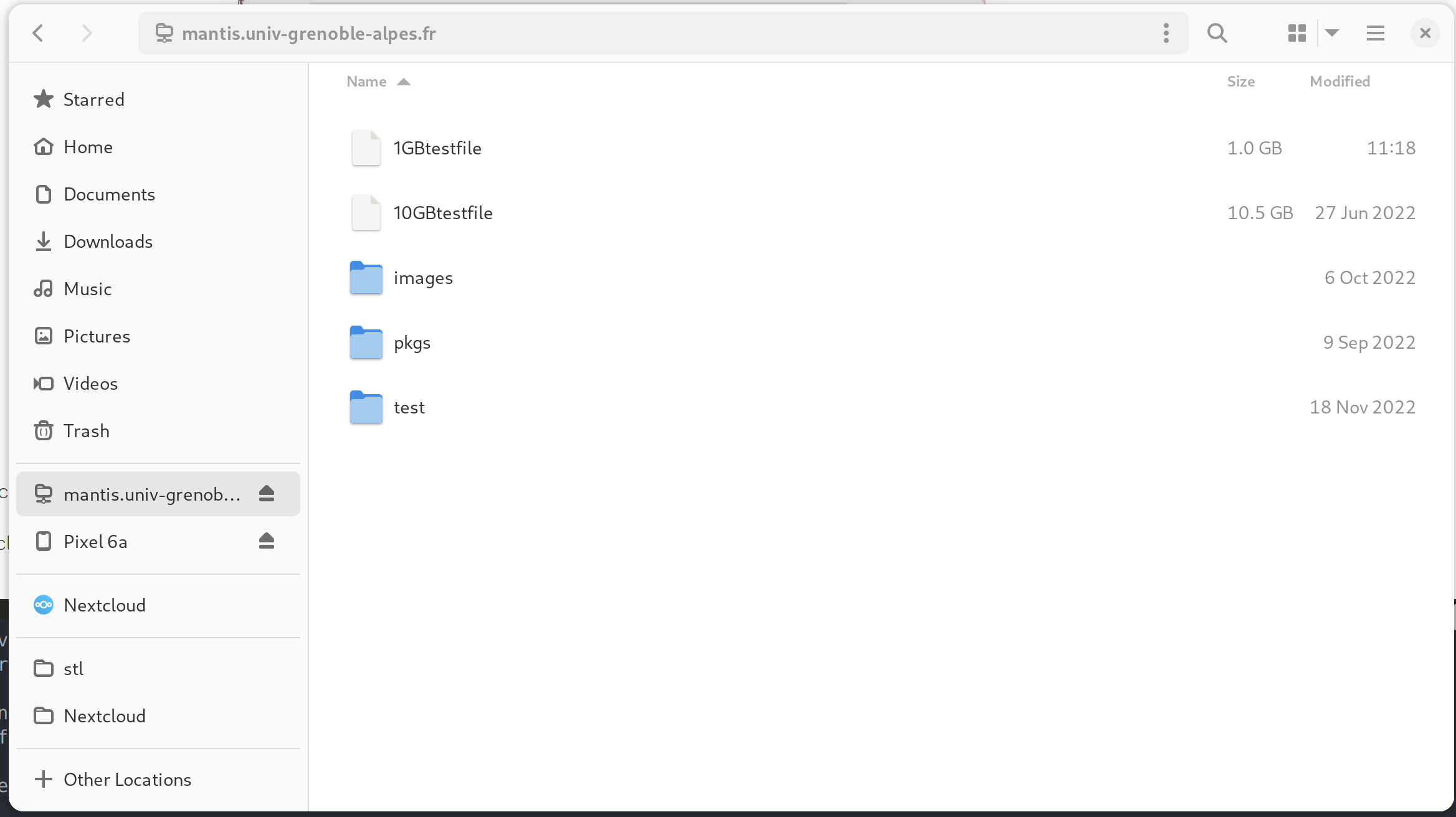

Webdav protocol

The WebDAV protocol is a convenient gateway between the outside world and Mantis cloud storage. All you need is a WebDAV client connected to the following URL:

https://mantis.univ-grenoble-alpes.fr/davrods/

Or, depending on the tool:

davs://mantis.univ-grenoble-alpes.fr/davrods/

The final slash is important.

Sample session with the GNOME file manager:

Enter the connection string davs://mantis.univ-grenoble-alpes.fr/davrods/ in the “Other Locations” field.

Example of a session with the Linux command line client cadaver:

[bzizou@bart:~]$ cadaver https://mantis.univ-grenoble-alpes.fr/davrods/

WARNING: Untrusted server certificate presented for `mantis.univ-grenoble-alpes.fr':

Issued to: Université Grenoble Alpes, 621 avenue Centrale, Saint-Martin-d'Hères, Auvergne-Rhône-Alpes, 38400, FR

Issued by: GEANT Vereniging, NL

Certificate is valid from Tue, 15 Dec 2020 00:00:00 GMT to Wed, 15 Dec 2021 23:59:59 GMT

Do you wish to accept the certificate? (y/n) y

Authentication required for DAV on server `mantis.univ-grenoble-alpes.fr':

Username: bzizou

Password:

dav:/davrods/> ls

Listing collection `/davrods/': succeeded.

Coll: povray_results_mantis2 0 Jun 3 2020

Coll: system 0 Jun 30 16:48

10GBtestfile 10485760000 Nov 13 14:26

test1 161886424 Aug 26 15:08

dav:/davrods/> get test1

Downloading `/davrods/test1' to test1:

Progress: [=============================>] 100.0% of 161886424 bytes succeeded.

dav:/davrods/> quit

Connection to `mantis.univ-grenoble-alpes.fr' closed.
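The gateway can also be scripted from a machine without the iCommands, for example with curl, since WebDAV downloads and uploads are plain HTTP GET and PUT requests. This is a sketch under stated assumptions: `MANTIS_USER` (your PERSEUS login) and the function names are hypothetical, paths are relative to your Mantis home (as in the cadaver session above), and paths containing spaces or other special characters would need percent-encoding.

```bash
#!/usr/bin/env bash
# Sketch: WebDAV transfers with curl through the Davrods gateway.
DAVRODS_URL="https://mantis.univ-grenoble-alpes.fr/davrods"

# Download a data object; with -u and no password, curl prompts for it.
davrods_get() {
  local remote_path="$1"
  curl --fail -u "$MANTIS_USER" -o "$(basename "$remote_path")" "$DAVRODS_URL/$remote_path"
}

# Upload a local file into a collection (home if none is given). The URL
# ends with "/", so curl appends the local file name to it.
davrods_put() {
  local local_file="$1" remote_coll="${2:-}"
  curl --fail -u "$MANTIS_USER" -T "$local_file" "$DAVRODS_URL/${remote_coll:+$remote_coll/}"
}
```

For example, `MANTIS_USER=bzizou davrods_get test1` would download the test1 file shown in the session above.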

Public read-only https gateway

You can open your files to the outside world by setting up a URL for direct access to them. This enables you to share files with external collaborators who do not necessarily have a PERSEUS account. To do this, you must first request a Mantis public access account via the [SOS-GRICAD helpdesk](https://sos-gricad.univ-grenoble-alpes.fr/), specifying:

- A name of no more than 10 characters, in lower case

- The list of irods collections you wish to publish

We’ll then provide you with a login of the form http-name (where name is the name you chose) and a secret, which let you build a URL that you can publish. This URL takes the following form:

https://mantis.univ-grenoble-alpes.fr/getfile?user=http-name&secret=secret&path=/mantis/file_path_to_download
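The URL can be assembled with a small script. This is a sketch under stated assumptions: the function names are hypothetical, the login and secret are the ones GRICAD gives you, and characters other than letters, digits, "/", ".", "_", "~" and "-" are percent-encoded, which we assume the gateway decodes like any standard query string.

```bash
#!/usr/bin/env bash
# Hypothetical helper: percent-encode a string byte by byte, keeping
# letters, digits and / . _ ~ - unchanged.
urlencode() {
  local LC_ALL=C
  local s="$1" out="" c i
  for (( i = 0; i < ${#s}; i++ )); do
    c="${s:i:1}"
    case "$c" in
      [A-Za-z0-9/._~-]) out+="$c" ;;
      *) out+=$(printf '%%%02X' "'$c") ;;
    esac
  done
  printf '%s\n' "$out"
}

# Hypothetical helper: build the public getfile URL for a data object.
mantis_public_url() {
  local user="$1" secret="$2" path="$3"
  printf 'https://mantis.univ-grenoble-alpes.fr/getfile?user=%s&secret=%s&path=%s\n' \
    "$(urlencode "$user")" "$(urlencode "$secret")" "$(urlencode "$path")"
}
```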


Cargo

A dedicated host, mantis-cargo.u-ga.fr, has been set up for certain transfer operations to and from Mantis. This host is directly accessible with your PERSEUS account and provides the iCommands clients by default. This “Cargo” host also provides a large temporary local directory for transfer operations (/cargo). Two scripts are available there for iRODS registration and centralized replication:

```
~$ cireg.py -h
Usage: cireg.py [options]

Options:
  -h, --help            show this help message and exit
  -c COLLECTION, --collection=COLLECTION
                        Full path of the directory to register as an irods
                        collection
  -y, --yes             Answer yes to all questions
  -K, --checksum        Calculate a checksum on the iRODS server and store
                        with the file details
  -v, --verbose         Be verbose

~$ cipull.py -h
Usage: cipull.py [options]

Options:
  -h, --help            show this help message and exit
  -c COLLECTION, --collection=COLLECTION
                        Full path of the collection to centralize
  -y, --yes             Answer yes to all questions
  -v, --verbose         Be verbose
```

Direct remote access

Direct remote access from machines in research laboratories is possible, to give users greater flexibility in managing their data (generating data directly in the infrastructure, downloading data produced by an instrument, downloading the output of computing jobs, etc.). Setting up such access is not trivial, since it requires opening several firewalls to machines with a fixed IP address. Each request is therefore evaluated case by case, so that the access can be set up and optimized. To request it, contact us either by email at [sos-calcul-gricad](mailto:sos-calcul-gricad@univ-grenoble-alpes.fr) or via the [SOS-GRICAD helpdesk](https://sos-gricad.univ-grenoble-alpes.fr).

Backup Resources

Introduction

Mantis provides special high-capacity resources for backup copies of your sensitive files, of files you want to archive in the medium term, or of files you want to move off the cluster scratch for a while. These resources are replicated in two different data centers, so that you always have a copy in case of a major disaster (flood, fire, …). Backup resources have much more capacity than “standard” resources, but they are also much slower! They are only meant to host backup copies of your files, not to be accessed intensively from the compute nodes, so please use them with care: if you plan to access the files intensively again, first replicate them to the default resources (“stage-in”).

A “backup copy” is not a “full backup”. It does not protect you against human errors such as “oops, I deleted the files, can I recover them?” No: you will not be able to restore files that you deleted yourself! A backup copy protects you from losing your files if the original system crashes, for example, and it allows you to free up space on a scratch file system. But it is not a versioned backup with a retention period. There is no retention!

Backup resources are “slow”. This is the price of having very big capacitive resources.

To avoid overloading these slow resources, we highly recommend using the -N 0 option of the iput and irepl commands when uploading files to the backup resources. This option sets the number of parallel transfer threads to 0, so each file is transferred in a single stream.

321 rule

A common rule says that, to be safe from data loss, you should keep 3 copies of your files, on 2 different types of storage, with 1 copy off-site. If your original files are on another storage system (e.g. Bettik or Summer), you satisfy this 321 rule simply by uploading your files to the Mantis2 backup resources, since these resources are replicated to 2 different physical sites.

Usage

The backup resources currently consist of nigel4-zfs and nigel5-zfs under the name backup:

$ ilsresc backup

backup:replication

├── nigel4-zfs:unixfilesystem

└── nigel5-zfs:unixfilesystem

For information, nigel-4 is located in the IMAG building and nigel-5 in the DSIM building.

There are basically two ways to use the Mantis2 backup resources: you can replicate files that are already hosted on Mantis, or you can upload files directly to the backup resources. In both cases, you don’t have to worry about replication across the two sites: Mantis automatically creates both replicas.

Be aware that in the first case, if you don’t already have a copy outside of Mantis, you don’t fully meet the 321 rule, since all 3 copies are stored on a single type of storage whereas at least 2 different types are needed.

Replication of files that are already hosted by Mantis

Suppose you have already uploaded a my_files collection to Mantis. You can secure your data by replicating it to the backup resource with the irepl command:

irepl -N 0 -r my_files -R backup

You can check if a file from this collection has been correctly replicated on the 2 backup resources:

$ ils -l my_files/biology/quast

/mantis/home/username/my_files/biology/quast:

username 0 imag;nigel2-r1-pt;nigel2-r1 1623 2022-05-31.11:04 & default.nix

username 1 backup;nigel5-zfs 1623 2022-05-31.11:05 & default.nix

username 2 backup;nigel4-zfs 1623 2022-05-31.11:05 & default.nix

If you already have the original files elsewhere (recommended to satisfy the 321 rule), you can delete the first replica (the one with id 0) to free up space on the Mantis imag resources, using the itrim command:

$ itrim -n 0 -r my_files

Total size trimmed = 0.125 MB. Number of files trimmed = 108.

$ ils -l my_files/biology/quast

/mantis/home/username/my_files/biology/quast:

username 1 backup;nigel5-zfs 1623 2022-05-31.11:05 & default.nix

username 2 backup;nigel4-zfs 1623 2022-05-31.11:05 & default.nix
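Before trimming, you may want to check that the backup replicas really exist. The sketch below does this for a single data object by parsing the `ils -l` layout shown above (third field: resource hierarchy; sixth field: `&` for a good replica). The function names are hypothetical, and the parsing assumes that output layout does not change.

```bash
#!/usr/bin/env bash
# Hypothetical helper: count good replicas of a data object on "backup".
count_backup_replicas() {
  ils -l "$1" | awk '$3 ~ /^backup;/ && $6 == "&" { n++ } END { print n + 0 }'
}

# Hypothetical helper: trim replica 0 only if both backup replicas are present.
trim_if_backed_up() {
  local obj="$1" n
  n=$(count_backup_replicas "$obj")
  if [ "$n" -ge 2 ]; then
    itrim -n 0 "$obj"
  else
    echo "refusing to trim $obj: only $n good backup replica(s)" >&2
    return 1
  fi
}
```

For example, `trim_if_backed_up my_files/biology/quast/default.nix`.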

Note that your files are always available for direct download:

iget my_files/biology/quast/default.nix

The only difference is that backup resources are not as fast as the “imag” resources, so before intensive use you may want to replicate the files back to imag:

irepl -r my_files -R imag

You can delete an entire collection and any of its replicated files (even those on backup resources!):

# WARNING: no way back!

$ irm -r my_files

Copy files directly to backup resources

You can upload files directly to the backup resources and get 2 replicas of your files:

$ imkdir my_files

$ iput -N 0 -r biology my_files -R backup

Check the replicated copy:

$ ils -l my_files/biology/astral

/mantis/home/username/my_files/biology/astral:

username 0 backup;nigel5-zfs 1081 2022-05-31.11:28 & default.nix

username 1 backup;nigel4-zfs 1081 2022-05-31.11:28 & default.nix

Note that your files are always available for direct download:

iget my_files/biology/quast/default.nix

The only difference is that backup resources are not as fast as the “imag” resources, so before intensive use you may want to replicate the files back to imag:

irepl -r my_files -R imag

You can delete an entire collection and any of its replicated files (even those on backup resources!):

# WARNING: no turning back possible!

$ irm -r my_files

Data consistency and use of checksums

The Mantis2 backup resources are 2 replicated resources in 2 different data centers. Replication is automatic, but it does not guarantee data consistency! In some very rare cases, a site failure during an update could leave one replica out of sync.

It is therefore recommended to use checksums. A checksum is a fingerprint of the file content that is calculated when the file is transferred and stored as file metadata. It allows later comparisons to verify the consistency of the file. It is also a good way to check that the transferred file is exactly the same as the source file (no alteration of data during transfer).

Checksums are not calculated by default, as this takes time and resources. We recommend using the -K option of iput when uploading files. This option calculates a checksum of the source file, uploads the file, then calculates the checksum on the destination resource (which is stored for later checks), and verifies that the 2 checksums are identical:

$ iput -N 0 -K -r biology my_files -R backup
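You can also compare a local file with its copy on Mantis yourself. The checksums displayed by ichksum on this page have the form `sha2:` followed by the base64 encoding of the raw SHA-256 digest, so the same string can be computed locally. This is a sketch: the function names are hypothetical, openssl must be installed, and the comparison handles a single data object, not a whole collection.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compute the "sha2:<base64 digest>" string for a local file.
irods_sha2() {
  printf 'sha2:%s\n' "$(openssl dgst -sha256 -binary "$1" | base64)"
}

# Hypothetical helper: succeed if the local file matches the checksum
# reported by ichksum for the given data object.
same_as_mantis() {
  local local_file="$1" remote_obj="$2" remote
  remote=$(ichksum "$remote_obj" | awk '{ print $NF }' | grep '^sha2:' | head -n 1)
  [ "$(irods_sha2 "$local_file")" = "$remote" ]
}
```

For example, `same_as_mantis biology/quast/default.nix my_files/biology/quast/default.nix && echo identical`.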

Advanced rules

Mantis provides some customizations, which we have implemented for some use cases from the GRICAD community. Feel free to use them or to ask for other customizations…

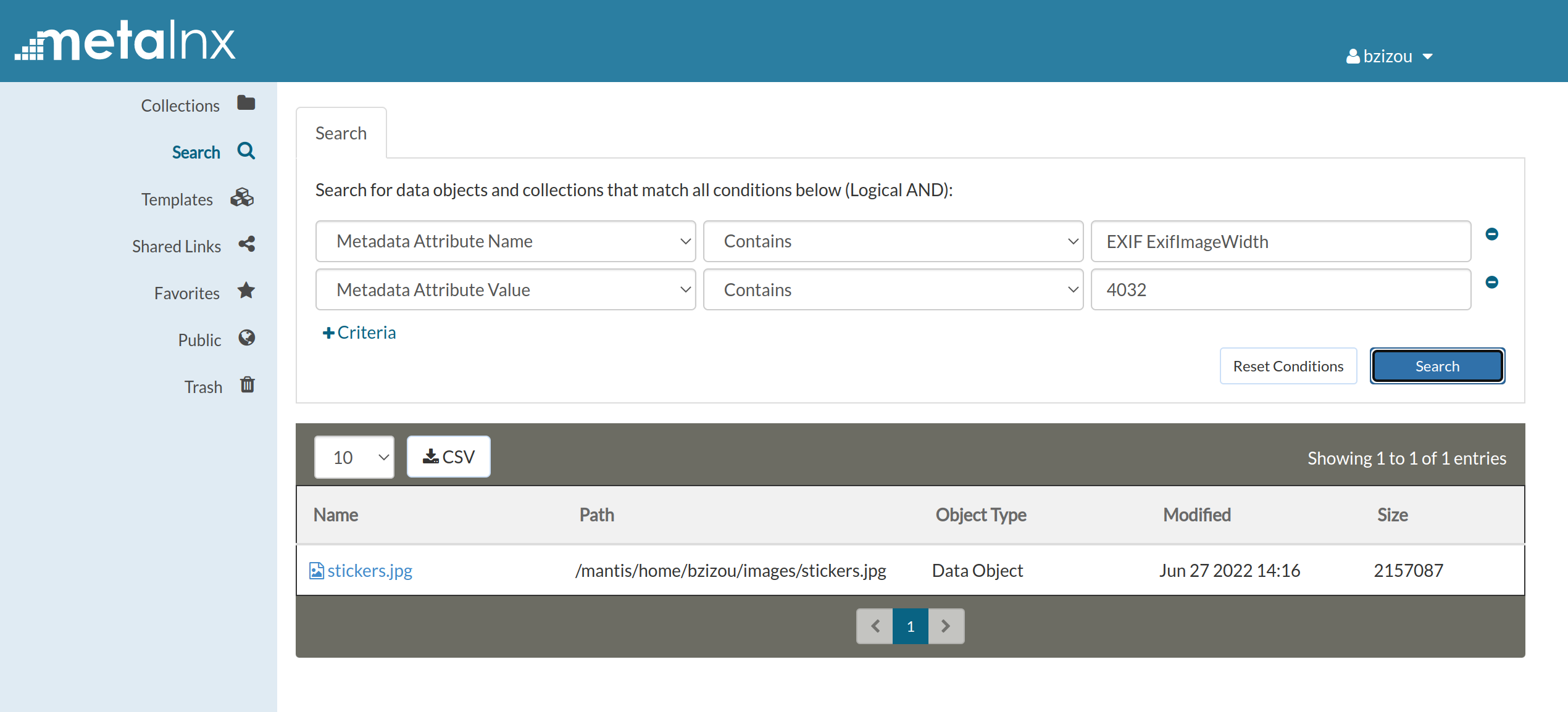

Importing EXIF information from images into the metadata

When you upload .JPG (or .jpg) files containing EXIF information, this information is automatically converted to iRODS metadata, so you can run queries on it. Here is an example:

# Print metadata of the previously uploaded "stickers.jpg" image

$ imeta ls -d stickers.jpg

AVUs defined for dataObj /mantis/home/bzizou/stickers.jpg:

attribute: EXIF ApertureValue

value: 7983/3509

units:

----

attribute: EXIF BrightnessValue

value: 2632/897

units:

----

[...]

# Find all images wider than 4000 pixels

$ imeta qu -d "EXIF ExifImageWidth" ">" "4000"

For advanced use of metadata, we recommend the python module python-irodsclient.

Automatic creation of image thumbnails

When you create a subcollection named thumbnails and put images in its parent collection, small 600x600 versions of the images are automatically created in the thumbnails subcollection:

$ imkdir images

$ imkdir images/thumbnails

$ iput stickers.jpg images

$ ils -l images/stickers.jpg

bzizou 0 imag;nigel1-r1-pt;nigel1-r1 2157087 2022-05-31.15:52 & stickers.jpg

$ ils -l images/thumbnails

/mantis/home/bzizou/images/thumbnails:

bzizou 0 imag;nigel3-r1-pt;nigel3-r1 98861 2022-05-31.15:52 & stickers.jpg

OAR job trigger file

You can trigger an OAR job on a GRICAD cluster simply by uploading a file (even an empty one) with a name of the following form:

`<script>_<cluster>_oar-autosubmit`

- The script named `<script>` must be an OAR script located in a `mantis/` directory in your home on the cluster.

- The OAR job is submitted on the `<cluster>` cluster (currently only `bigfoot.u-ga.fr` is supported).

- The OAR job is submitted with the full iRODS path of the `<script>_<cluster>_oar-autosubmit` file given as parameter.

Example:

```
$ # From the bigfoot.u-ga.fr frontend, set up an OAR script
$ mkdir -p ~/mantis
$ cat > ~/mantis/orchamp.oar <<'EOF'
#!/bin/bash
#OAR -l /nodes=1/gpu=1,walltime=00:05:00
#OAR -p gpumodel='A100' or gpumodel='V100'
#OAR --project orchampvision
# $1 is the full iRODS path of the trigger file
echo "$1"
nvidia-smi -L
EOF
$ chmod 755 ~/mantis/orchamp.oar
$ # Suppose you have some input files in the "test_jobs" collection on Mantis
$ touch orchamp.oar_bigfoot.u-ga.fr_oar-autosubmit
$ iput orchamp.oar_bigfoot.u-ga.fr_oar-autosubmit test_jobs/
$ # Check your jobs
$ oarstat -u
$ oarstat -fj <id>
```

You should see a new job running the command:

command = ./mantis/orchamp.oar /mantis/home/username/test_jobs/orchamp.oar_bigfoot.u-ga.fr_oar-autosubmit
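If you trigger jobs often, the naming convention can be wrapped in a small function. This is a minimal sketch: the function name `mantis_trigger_oar` is hypothetical, the target collection is assumed to exist, and the OAR script itself must already be in `~/mantis/` on the target cluster.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create an empty <script>_<cluster>_oar-autosubmit file,
# upload it into an existing collection, then remove the local copy.
mantis_trigger_oar() {
  local script="$1" cluster="$2" collection="$3"
  local trigger="${script}_${cluster}_oar-autosubmit"
  touch "$trigger" &&
    iput "$trigger" "$collection/" &&
    rm -f "$trigger"
}
```

For example, `mantis_trigger_oar orchamp.oar bigfoot.u-ga.fr test_jobs` reproduces the touch and iput steps of the example above.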

Mantis Charter

Service offered by GRICAD/CIMENT

GRICAD/CIMENT offers its users a distributed storage system accessible from all major GRICAD/CIMENT computing clusters. The core [1] of this system is mainly intended for users in “Grid” mode (mainly CiGri), i.e. users who run computing jobs on several platforms simultaneously. However, access outside the “Grid” mode is also possible, in particular when a laboratory has contributed to the extension of the system, or for archiving in order to reduce the load on local disk systems.

[1]: the “core” corresponds to the initial volume of about 750 TB, financed by GRICAD/CIMENT internal funds.

Quotas

All users with a PERSEUS account have a default storage space of 100 TB on Mantis, to simplify access and allow testing of the solution. To request a quota extension, please contact us either by email at sos-calcul-gricad or via the SOS-GRICAD helpdesk.

Participation in capacity building

As users, you can help increase the capacity of the infrastructure: through your laboratory and your projects, you can contribute to the purchase of equipment. The purchased equipment is added to the existing pool without distinction, to increase the overall capacity. However, contributing users or groups have their quota increased by the amount of their net contribution, and they can use the infrastructure without necessarily using the grid mode.

Caveats

No data backups are performed by GRICAD, although Mantis allows users to manage replicated “backup copies”. The infrastructure is designed to withstand minor hardware failures, but we cannot be held responsible for the loss of data stored on it in case of a major hardware failure. Remember also that a resilient infrastructure is not a backup: unlike a backup system, it does not prevent data loss due to a handling error, or data corruption caused by software. Data security is ensured within the limits of what the middleware provides. As a user, you control the access rules for the data you store on the infrastructure. GRICAD therefore cannot be held responsible for the loss or theft of data resulting from misuse of the access rule mechanisms or from a system malfunction.

User engagement

- Users are bound by the Charter for the use of UGA computer facilities.

- Users must store only data closely related to the research project for which they were granted access to the infrastructure.

- Users must delete all obsolete data to free up space at the global level, regardless of their quota usage.

- Users should report any suspicious malfunction to the support team by email at sos-calcul-gricad or through the [SOS-GRICAD helpdesk](https://sos-gricad.univ-grenoble-alpes.fr/).

- Users with sensitive data requiring special protection measures should inform the support staff by email at sos-calcul-gricad or via the SOS-GRICAD helpdesk, so that appropriate solutions can be considered and they are properly informed of the level of protection provided.